Every usability professional knows that Morae is a useful tool for running a software or web usability test. But did you know you could also use it to dramatically cut the time it takes to do a heuristic evaluation? This 'How do I…' article gives you step-by-step instructions on how to carry out an expert review with Morae, complete with explanatory screenshots.

Jakob Nielsen introduced the notion of "discount usability" in the early 1990s. Discount usability served as an antidote to the received wisdom (amongst developers at least) that usability takes forever and costs too much money. It introduced three key techniques that aimed to simplify methods of data collection: usability testing, low fidelity prototyping and heuristic evaluation.

The cost of Nielsen's first discount usability method, usability testing, has been greatly reduced by TechSmith's Morae software, first released in 2004. Morae is a suite of tools that lets you record all your computer activity, including screen, camera, audio, mouse and keyboard activity.

I've spent a lot of time using Morae for usability testing. Sometimes, when we get to user number 10 and we're encountering the same usability problem yet again, my mind wanders. Perhaps it's the hum of the air conditioning or the darkened room. Whatever. During one of these sessions it occurred to me that Morae could be used to support the other two components of discount usability: low fidelity prototyping and heuristic evaluation. In this article I describe how to use it for heuristic evaluation — I'll talk about paper prototyping in a follow-up article.

Heuristic evaluation is a key component of the discount usability movement introduced by Jakob Nielsen. In Nielsen's own words:

"Heuristic evaluation is a usability engineering method for finding the usability problems in a user interface design so that they can be attended to as part of an iterative design process. Heuristic evaluation involves having a small set of evaluators examine the interface and judge its compliance with recognized usability principles (the "heuristics")." (From "How to Conduct a Heuristic Evaluation".)

Heuristic evaluations are bread-and-butter work for virtually every usability professional, but not everyone follows Nielsen's suggestion that 3-5 evaluators should assess the interface. Nielsen arrived at this figure after assessing a voice mail user interface with 19 evaluators, when he found that some usability problems are spotted by very few evaluators. People tend not to follow this advice simply because of the extra work involved. Although each expert may spend just 1-2 hours assessing the interface, each expert then needs to write up his or her notes so that the lead evaluator can understand the problems, and this overhead can often take the rest of the day. Multiply this by 3-5 evaluators, add extra time to compile the results and present the findings, and you have a process that can take a week to complete: no quicker than running a usability test.

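By using Morae to streamline this process you can cut the review time to a single day and have the data analysed and the report written in Microsoft Word in 1-2 more days. The process is as follows: each reviewer records a review session in Morae Recorder; the lead evaluator identifies the issues in Morae Manager; the markers are exported to Excel; a mailmerge template is set up in Word; and the report is generated.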

Let's go through each of these steps in turn.

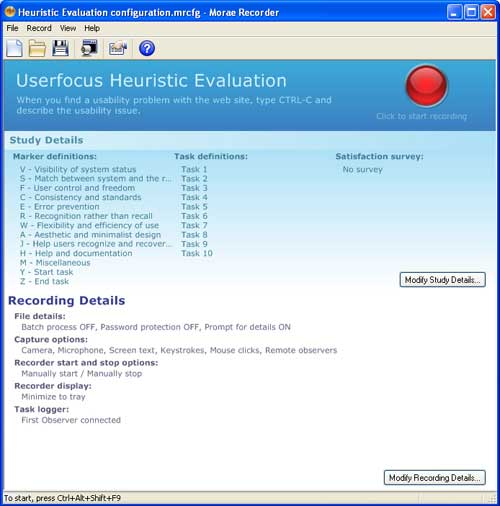

The first step is to design a suitable configuration file in Morae Recorder. You'll want each reviewer to use this file so that you have a consistent set of markers that you can apply to each recording. Figure 1 shows a screenshot from a configuration file that is suitable for a traditional Nielsen evaluation. (We've also added a "miscellaneous" category to cover issues that don't easily fit into any of Nielsen's categories.) Of course, if you use a different set of heuristics (like ISO's dialogue principles) you'll want to replace these heuristics with your favoured set.

Figure 1: Marker definitions tab in the Morae Study Setup window. The definitions match the heuristics that you'll use in the review.

Your reviewers won't need to use the markers that are shown in Figure 1; all they need to know is to press "CTRL-C" whenever they spot a usability problem. Give each reviewer these instructions:

"Please carry out your review as you would normally. But every time you spot a usability problem I want you to press "CTRL-C" on the keyboard and describe the usability problem. "CTRL-C" acts as a marker for me so I can review your session and fast forward to the usability issues. There's no need for you to speak at other points during the review since I won't listen to the entire session. But it is important that you clearly describe each usability problem for me so I can categorise it and write it in the report."

(If you're using Morae 3.1's Wii Remote to log issues, adapt the instructions accordingly.) For complex interaction problems it is also worth stressing that, after evaluators spot a problem, they should do a clean re-run that triggers the issue and then add their CTRL-C / Wii Remote marker. This makes it easier for the lead evaluator to understand the issue and often saves a few emails or phone calls to the evaluator. You could also create a video clip of this re-run to demonstrate the issue to the development team.

When your reviewers open the configuration file, they'll see something like Figure 2.

Figure 2: The Morae configuration file seen by each reviewer.

Why do we ask reviewers to identify the point when they find a usability problem with CTRL-C? The actual keystroke is unimportant: the main consideration is to choose a keystroke that won't interfere with normal use of the application (CTRL-W is probably a bad choice). Although our reviewers might use CTRL-C to copy text in the browser, this is a low frequency activity, and if they do press it for another reason we'll soon know when we review the session, so we can ignore it. If the software that you're reviewing requires lots of CTRL-C text copying during the review, just replace this keystroke with something that won't interfere as much. If your reviewers use the Wii Remote to log issues then you don't need to worry about any conflicts.

Time required: Assuming each evaluator spends an average of 90 minutes on their review, the review component of the heuristic evaluation can be completed by 5 evaluators in a single day.

In this step, you'll import all of the recordings into Morae Manager and identify the issues.

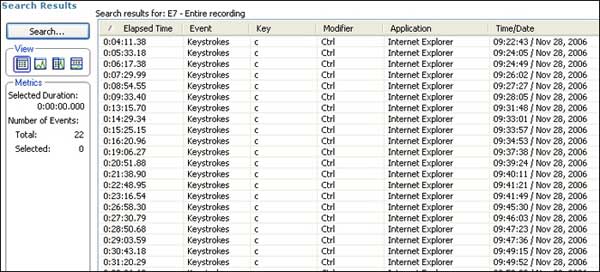

Starting with the first evaluator's recording, search for all of the markers added with the Wii Remote or for all the CTRL-C keystrokes. (You find keystrokes by selecting the "Search..." button in the "Analyze" tab and then by specifying the search in the "Search Editor" dialog box). The search results window will now list all of the points during the recording where the evaluator flagged a usability problem (see Figure 3).

Figure 3: The search results screen from Morae Manager after a completed session.

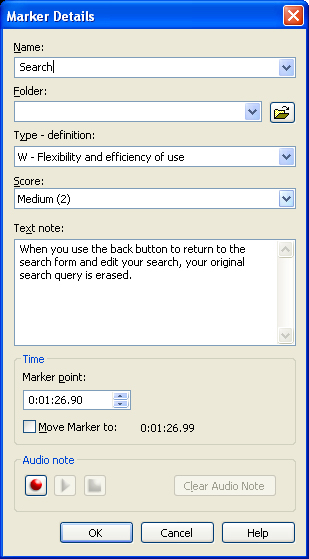

Now you work through each usability issue in turn. Double-click the first item in the search results and listen to the reviewer's comment. Then add a marker and summarise the comment. Most of the items in this dialog are self-explanatory (see Figure 4), although we tend to use the "Name" field to describe the location in the user interface where the issue was found.

Figure 4: The marker details screen in Morae Manager. This is where the lead reviewer describes each usability issue.

As you work through the files, you'll notice that some usability problems were spotted by multiple evaluators. Duplicate problems can be safely ignored: we don't add a marker for them, we just move on to the next usability issue.

If you want to include screenshots in the final report, take a screenshot every time you note a usability issue. With Morae, you do this by selecting the marker and then choosing "File>Save>Screen Frame..." To make sure you can find the correct screenshot later, be sure to give it a name that relates to the reviewer and the usability problem number, for example "E1-16.jpg" for usability problem 16 found by the first expert reviewer.
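The naming convention above is easy to apply inconsistently across five recordings, so a tiny helper can generate the names for you. This is only an illustrative sketch: the function name `screenshot_name` is hypothetical and the `.jpg` default simply mirrors the "E1-16.jpg" example.

```python
def screenshot_name(reviewer_number: int, problem_number: int, ext: str = "jpg") -> str:
    """Build a file name such as 'E1-16.jpg' (reviewer 1, usability problem 16)."""
    if reviewer_number < 1 or problem_number < 1:
        raise ValueError("reviewer and problem numbers start at 1")
    return f"E{reviewer_number}-{problem_number}.{ext}"


print(screenshot_name(1, 16))
```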

Time required: About 2 minutes per usability problem (about half-a-day for a typical review). Add another half-day if you want to include screenshots.

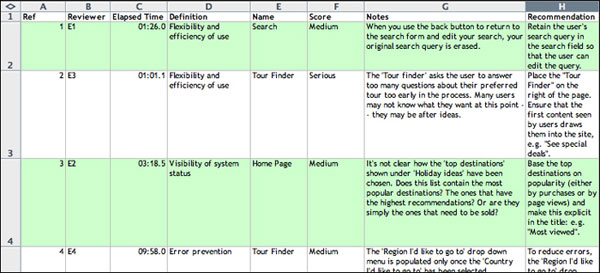

In this step, you export each set of markers for each evaluator and then import them into Excel.

The export process is very straightforward. Simply carry out a search in Morae Manager, but this time search for "All markers and tasks" (this is the default search in Morae). The search results window will now list all of the markers that you set for that evaluator. You now right-click on the search results window, select "Export Results..." and then save the results as a .csv file to your desktop. Repeat this step for each of your evaluators.

You should now have five .csv files on the desktop (one per evaluator, assuming you used five evaluators). Launch Excel and open the first file from within Excel. (Don't double-click the file. Excel is a bit clueless and will only launch its "Text Import Wizard" if you open the file from within Excel. If you don't go through this Wizard, Excel treats the commas as part of the text rather than as delimiters.)

After opening the file, we then add a column to identify the reviewer. For example, for the first reviewer we add a column to the left of the results and type "E1" next to each of the usability issues. This is useful later on because it helps us identify the reviewer who first spotted the issue.

We now repeat this for each reviewer and then cut and paste the results so that all of the findings are in a single worksheet. Then we add an additional column at the far left that we call "Ref." which just contains a sequential number for each usability issue (so that it can be uniquely referenced). Then we add a column at the far right that we call "Recommendation".

Finally, we clean up the worksheet by removing most of the columns that aren't needed. We delete the "Marker Type" column, the "Event" column, the "Creator" column, the "Created in" column, the "Time/Date" column and the "Action" column. This leaves us with 8 columns in our spreadsheet: "Ref." and "Reviewer" (two columns that we added); "Elapsed time", "Definition", "Name", "Notes" and "Score" from Morae; and finally a column labelled "Recommendation" that we added. Figure 5 shows an example.

Figure 5: Once the markers have been exported, you can import them into Excel and add extra information.
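If you would rather script the copy-and-paste work than do it by hand in Excel, the same steps can be sketched in Python. This is a minimal sketch, not part of Morae: the function names are hypothetical, and the column headers are taken from the description above, so the exact headers in a real export are an assumption you should check against your own files.

```python
import csv

# Column names come from the article's description of the export;
# the exact headers in a real Morae .csv file are an assumption.
KEEP = ["Elapsed time", "Definition", "Name", "Notes", "Score"]


def merge_exports(paths):
    """Combine one exported .csv per evaluator into a single list of rows.

    Each row gains 'Ref.' (a sequential number), 'Reviewer' (E1, E2, ...)
    and an empty 'Recommendation' column; other Morae columns are dropped.
    The order of `paths` decides which file becomes E1, E2 and so on.
    """
    rows = []
    for reviewer_number, path in enumerate(paths, start=1):
        with open(path, newline="", encoding="utf-8-sig") as handle:
            for record in csv.DictReader(handle):
                row = {"Ref.": len(rows) + 1, "Reviewer": f"E{reviewer_number}"}
                row.update({name: record.get(name, "") for name in KEEP})
                row["Recommendation"] = ""
                rows.append(row)
    return rows


def write_merged(rows, out_path):
    """Write the merged rows to a .csv file that Excel can open."""
    fieldnames = ["Ref.", "Reviewer", *KEEP, "Recommendation"]
    with open(out_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
```

Call `merge_exports` with the evaluator files in order (the first path becomes "E1"), then pass the result to `write_merged` and open the output in Excel to write the recommendations.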

We then work through each of the usability issues in turn, adding a recommendation for each usability problem.

Time required: About 30 minutes of file management plus about 2 hours to write the recommendations.

For some teams, the Excel file may be a sufficient report on its own. If so, you've managed to complete an expert review with 5 reviewers in less than 2 days. Congratulations! However, if you now want to format the results so they look nice and pretty, you can do this lightning fast by using Microsoft Word's mailmerge feature.

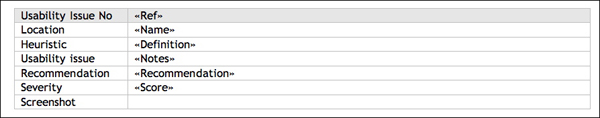

Simply set up a template along the lines shown in Figure 6 with your own company's branding and then set the mailmerge in action. Note that the mailmerge fields in Figure 6 correspond to the headings in the top row of the Excel file in Figure 5.

Figure 6: A mailmerge template for a simple usability report.

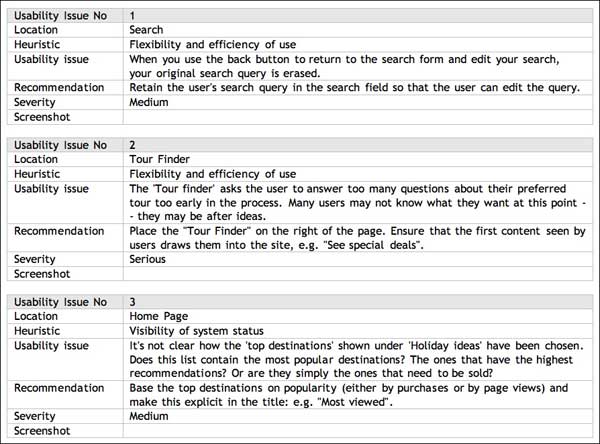

The final step is to generate your report. Simply complete the mailmerge and watch your report write itself. Figure 7 shows the kind of results you can expect. Use the "Screenshot" column to paste in any relevant screen captures from the user interface.

Figure 7: How the provisional usability report looks once the mailmerge is completed.
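To see what the mailmerge is doing, here is a plain-text analogue in Python: one template, filled in once per spreadsheet row. It is a sketch of the idea rather than a replacement for Word. The template wording and the names `ISSUE_TEMPLATE` and `render_report` are hypothetical; the row keys follow the spreadsheet columns described earlier.

```python
from string import Template

# A hypothetical plain-text stand-in for the Word template in Figure 6.
# Placeholder names avoid spaces and dots so that Template can parse them.
ISSUE_TEMPLATE = Template(
    "Issue $ref (found by $reviewer)\n"
    "Location: $name\n"
    "Heuristic: $definition\n"
    "Problem: $notes\n"
    "Recommendation: $recommendation\n"
)


def render_report(rows):
    """Fill the template once per spreadsheet row, as a mail merge does."""
    sections = []
    for row in rows:
        sections.append(
            ISSUE_TEMPLATE.substitute(
                ref=row["Ref."],
                reviewer=row["Reviewer"],
                name=row["Name"],
                definition=row["Definition"],
                notes=row["Notes"],
                recommendation=row["Recommendation"],
            )
        )
    return "\n".join(sections)
```

Each dictionary passed to `render_report` plays the role of one row in the Excel file, and each placeholder plays the role of one mailmerge field.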

Time required: 30 mins to design the template and set up the mailmerge plus a couple of hours to edit and paste in the screenshots.

So there you have it. A heuristic evaluation with five reviewers completed and written up in under 3 days.
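As a sanity check on that figure, you can add up the "Time required" estimates from each step. The sketch below does the arithmetic under three assumptions that are not in the estimates themselves: an 8-hour working day, "half-a-day" taken as 4 hours, and "a couple of hours" taken as 2.

```python
HOURS_PER_DAY = 8  # assumption: length of a working day
HALF_DAY = 4       # assumption: "half-a-day" taken as 4 hours

steps = {
    "Reviews (5 evaluators x 90 minutes)": 5 * 1.5,
    "Identify issues in Morae Manager": HALF_DAY,
    "Capture screenshots (optional)": HALF_DAY,
    "Export to Excel and write recommendations": 0.5 + 2,
    "Mailmerge template and editing": 0.5 + 2,  # "a couple of hours" taken as 2
}

total_hours = sum(steps.values())
total_days = total_hours / HOURS_PER_DAY
print(f"{total_hours} hours, about {total_days:.1f} working days")
```

Under these assumptions the total is 20.5 hours, or roughly 2.6 working days. Stopping at the Excel file and skipping the screenshots leaves 14 hours, which is 1.75 days.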

Thanks to Philip Hodgson, Miles Hunter, Phillip Gardiner and Bill Wessel for comments on earlier drafts of this article.

Dr. David Travis (@userfocus on Twitter) is a User Experience Strategist. He has worked in the fields of human factors, usability and user experience since 1989 and has published two books on usability. David helps both large firms and start ups connect with their customers and bring business ideas to market. If you like his articles, why not join the thousands of other people taking his free online user experience course?

This article is tagged expert review, heuristic evaluation, morae.

copyright © Userfocus 2021.
